"A soft or confidential tone of voice" is what most people will answer when asked what "whisper" is. Due to the huge hype around ChatGPT and DALL-E 2 this past year, all other OpenAI releases remained out of the spotlight, among which stands the "Whisper" — an automatic speech recognition system that can transcribe any audio file in around 100 languages of the world and if needed translate it into English.

In order to better grasp the idea behind the Whisper and its potential usage, let's start by defining its tasks.

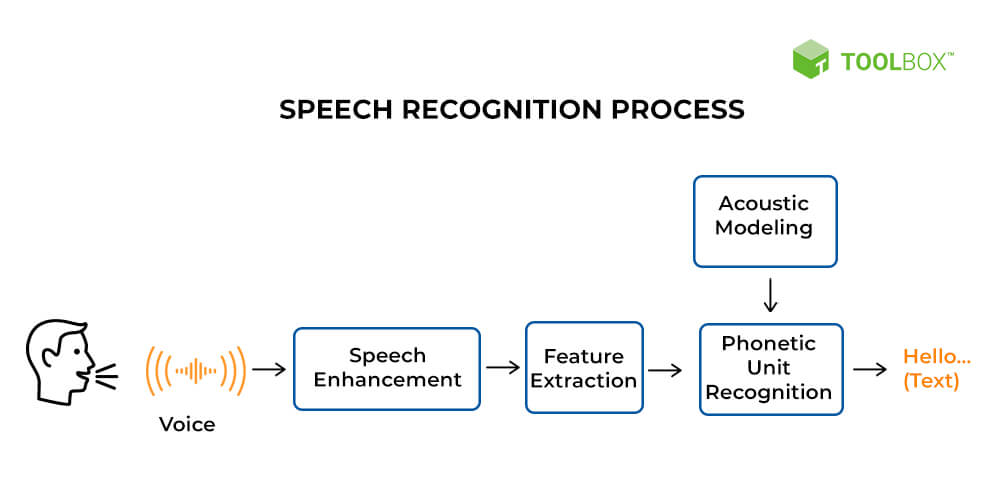

Automatic speech recognition (ASR)

As you can infer from the heading, the task expects an algorithm or system that is able to, first, differentiate human speech from background noise and other sounds and second, generate the corresponding text for the recognized speech. The task can be further divided into online and offline ASR, depending on the use case and the available resources.

There are plenty of use cases for online ASR systems. In fact, any real-time speech-to-text task, such as generating subtitles on the fly for live streams, can automatically generate the protocol of the judicial process in the court, assistance in contact centers, content moderation, and so on.

In addition, it could be also used in part of some pipelines to accomplish even more sophisticated tasks. Imagine having a platform that first applies an ASR technology to your voice input and then feeds the resulting text into the ChatGPT.

As a result, users would be able to quickly ask questions to ChatGPT without the need of typing them out. One can proceed even further and add a voice generation model at the end of this pipeline, which would take the ChatGPT's answer and generate a speech that the user would hear.

With this technology, people would be able to hold close to a real conversation with ChatGPT, allowing it to be used as the most modern voice assistant. But for such use cases, it is a big requirement that the ASR technology works in real-time; hence it should be computationally as lightweight as possible while maintaining high transcription accuracy.

The offline ASR, on the other hand, doesn't pose this strong speed requirement on the system, so these are generally more accurate while also being bulkier. Again, any speech-to-text task not requiring real-time performance is a use case for these systems, such as voice search, lyrics extraction from songs, subtitle generation for video files, etc.

That being said, we can understand that Whisper is mostly an offline automatic speech recognition technology, although, with a sufficiently powerful Graphics Processing Unit(GPU), it can achieve real-time performance if not applied to Eminem's "Rap God."

Automatic speech recognition technology: Mathematical modeling era

The history of automatic speech recognition dates back to 1952 when the famous Bell Labs Audrey was created. It was a very basic ASR system, capable of recognizing only numbers 0 to 9. Then for nearly 20 years, no significant breakthrough happened until the advent of a sophisticated yet very flexible statistical method called the Hidden Markov Models (HMM).

Researchers utilized those in combination with audio processing techniques targeting noise reduction to create the famous "trigram" model. Interestingly, due to their real-time speed and high accuracy, refined versions of the "trigram" model are the most used ASR technologies nowadays.

The deep learning era

In the past decade or two, neural networks' popularity has been growing exponentially, although theoretically, they were invented in the 1960s. This was due to the lack of computational resources to support the tremendous amount of parallel computations.

Deep learning models themselves are neural networks composed of multiple layers of artificial neurons stacked together according to some architecture. Their success started from AlexNet's huge accuracy score jump on the ImageNet image classification challenge and rapidly spread to the tasks of other domains.

The first attempts at using deep learning models for ASR aimed to improve the results of the "trigram" models by having better-spoken language feature extractors. So the whole ASR technology was a combination of a deep learning model, HMM, and some audio and text processing techniques.

However, the biggest drawback of these models was the fact that they were not end-to-end trainable, meaning that first, the deep learning model should be trained and only after that the HMM. As you can already guess, the next big step done by the researchers and developers was in the direction of end-to-end models, a representative of which is the Whisper model.

The Whisper

As progress was made in the direction of end-to-end training, the next blocker kicked in, which was the lack of labeled speech recognition data. The most hyped models of the previous year: Dall-E 2 and ChatGPT, were trained on large quantities of data.

In the case of ChatGPT, the data was so big that they used around 1000 GPUs for the training process, and the whole thing cost them around 4.8 million US dollars. While in the case of computer vision and natural language processing, such big datasets exist, in the speech recognition area researchers were not that lucky.

To address this issue, the Whisper was proposed by OpenAI. They argued that the public datasets do not suffice the needs of the automatic speech recognition models because researchers usually target a single task in a single language.

They proposed to perform multitask learning where the same model learns to do multiple tasks. And those tasks are typically comprised of speech detection, non-English transcription, English transcription, and any language-to-English translation.

The previous automatic speech recognition models solving only a single task of English transcription were limited to only English speech recognition datasets. Now Whisper adds similar datasets but in multiple languages.

Besides this, speech detection or any language-to-English translation data is pretty abundant, considering all the videos and movies available with English translations. Hence they got a training dataset of more than 680.000 hours of audio.

To imagine how this works, first, picture a usual deep learning model solving a single task as a black box receiving an input and yielding an output.

In the case of speech recognition, it receives a raw audio file, the Log Mel Spectrogram of it, which is a form of representation of the frequencies in the audio, then outputs the text that is spoken in the audio.

When we want the deep learning model to perform multiple tasks, we make branches in the model architecture — a single branch for each task.

And during the inference, with the help of special tokens given with the input audio, we tell the model the output of the branch we need.

As a result, the Whisper model can perform speech recognition in 100 languages of the world, as with each input, it first performs language identification and then translates the resulting text to English if prompted. Unlike ChatGPT, it is not deployed in some websites as a speech-to-text API, as the authors just released the code and pre-trained language models that can be found here.

Using the Whisper

The fact that only the model's codes are shared publicly narrows down the possible users to people having at least a basic understanding of Python.

Looking back for some 20 years, we see that back then, engineers coded every single piece of the math behind deep learning from scratch to train a simple classification model, but nowadays, it suffices to just import some library in Python and use its powerful modules.

Considering these trends of making artificial intelligence models as easy-to-use as possible for anyone, one will not wait long until this speech recognition technology is deployed somewhere with an easy-to-use user interface and experience.

To take a step in this direction, we at SuperAnnotate developed a collection of notebooks that can be run on-premises via Jupyter Notebooks or online via Google Colab.

Besides the notebook for Whisper, we also cover other tasks from computer vision and natural language processing domains, such as image classification, instance classification, object tracking, named entity recognition, and so on.

The Whisper notebook

The fact that the notebook can be run in Google Colab entirely makes it usable for non-tech people, too, as you just need to visit https://colab.research.google.com/ and import the notebook from GitHub, with this link.

To employ the model to your exact use case, you just need to set some variables in the notebook in clearly indicated places. For the purposes of the tutorial, we took a publicly available "US Elections 2020 - Presidential Debates" dataset.

The beauty of having the notebooks is that all the coding parts are done in notebook cells, and there is no need for users to have any coding skills. Instead, they just run the cells sequentially to satisfy their speech-to-text use case.

In their release, the authors of the Whisper provide pre-trained language models of different sizes, from tiny to large. As you can guess, the tiny is faster and less precise than the large one. We used the tiny one in the tutorial as it has enough accuracy to distinguish what the two presidential candidates speak.

SuperAnnotate and the pre-annotation use case

Proceeding with the tutorial, you will notice the last part of the notebook that uploads all the transcription chunks of the audio files (together with the corresponding start and end times of each chunk) to the SuperAnnotate platform.

This is done to target the specific use case of a user that wants to annotate new audio files in order to get a new ASR dataset. With the help of the Whisper, the raw audio file will be processed, and the predictions will be uploaded as pre-annotations to our platform. Then instead of annotating the whole file from scratch, the user will only need to make corrections, which will be significantly faster.

We hope that this will facilitate the creation of bigger and richer ASR datasets, posing even more complicated ASR challenges to the research community that will inevitably result in the creation of even more impressive ASR technologies.